The Importance of Data Engineering

Data engineers play a critical role in developing and managing enterprise data platforms. Demand is rising for data engineers who build ETL (Extract, Transform, Load) pipelines that turn raw data into usable information.

Data pipelines are necessary for building data-driven models, presentations and conclusions in a business environment. Without them, you will waste a lot of time tracking down faulty connections and may miss the innovative business opportunities coming your way.

Data engineering is a cornerstone of data science and analytics, and has important implications for an enterprise’s data landscape.

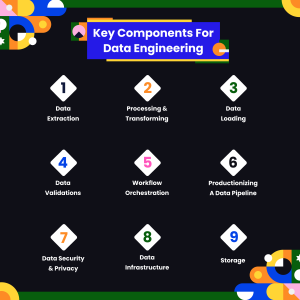

Data engineering is a complex process that can span many phases, but a few key components keep it running smoothly. At any given point in time, you should know which stage you are working on.

Key components of Data Engineering

Data Extraction:

Data extraction comes first. You pull information from your data sources to start building the data pipeline. Sources can include different types of data stores, such as a data lake or data warehouse, as well as APIs, and live events can be stored and accessed with Kafka. Depending on the requirements, data can be extracted at different speeds: batch, micro-batch or streaming. Data engineers need to understand which pattern applies before designing a data pipeline.
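To make the batch and micro-batch patterns concrete, here is a minimal sketch of incremental extraction. It assumes a relational source with a monotonically increasing `id` column that serves as a watermark, and it uses Python's built-in `sqlite3` as a stand-in for the real source; the `events` table and the `extract_incremental` helper are hypothetical. Each yielded list is one micro-batch, while reading everything in a single pass would be a plain batch run. Streaming from Kafka would use a consumer client instead and is not shown.

```python
import sqlite3
from typing import Iterator


def extract_incremental(conn: sqlite3.Connection, last_id: int, batch_size: int) -> Iterator[list[tuple]]:
    """Yield rows with id > last_id, in fixed-size micro-batches, ordered by id."""
    cursor = conn.execute(
        "SELECT id, event, amount FROM events WHERE id > ? ORDER BY id",
        (last_id,),
    )
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        yield batch


# Hypothetical source table standing in for an operational database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, event TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO events (id, event, amount) VALUES (?, ?, ?)",
    [(i, "purchase", i * 10.0) for i in range(1, 8)],
)

watermark = 2  # last id processed by the previous run (hypothetical)
batches = list(extract_incremental(conn, watermark, batch_size=2))
# The new watermark is the id of the last row in the last batch.
new_watermark = batches[-1][-1][0] if batches else watermark
```

Persisting `new_watermark` between runs is what lets the next run pick up only rows it has not seen yet.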

Processing and Transforming:

Once the data has been extracted, it is processed and transformed so that it can feed ML models or BI dashboards.

Depending on the data latency, data volume and computing framework, servers can be configured to process certain types of data. For example, if the volume of data is much higher than a single node can handle, data engineers will turn to cluster computing frameworks that spread the work across many machines.
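Here is a small, single-node illustration of what a transformation step typically does: parse types, normalize values, drop rows that cannot be parsed and aggregate the result. The record layout and field names are hypothetical, and plain Python stands in for whatever framework (pandas, Spark, SQL) a real pipeline would use. At cluster scale, the same logic would be expressed in that framework instead.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw records, as they might arrive from an extraction step.
RAW = [
    {"ts": "2024-03-01T09:15:00", "amount": "19.99", "country": " us "},
    {"ts": "2024-03-01T17:40:00", "amount": "5.01", "country": "US"},
    {"ts": "2024-03-02T08:05:00", "amount": "bad", "country": "de"},
    {"ts": "2024-03-02T12:30:00", "amount": "12.50", "country": "DE"},
]


def clean(record):
    """Parse types and normalize fields; return None for rows that cannot be parsed."""
    try:
        amount = float(record["amount"])
    except ValueError:
        return None
    return {
        "day": datetime.fromisoformat(record["ts"]).date().isoformat(),
        "amount": amount,
        "country": record["country"].strip().upper(),
    }


def daily_revenue(records):
    """Aggregate cleaned records into total revenue per (day, country)."""
    totals = defaultdict(float)
    for rec in filter(None, map(clean, records)):  # skip rows that clean() rejected
        totals[(rec["day"], rec["country"])] += rec["amount"]
    return {key: round(value, 2) for key, value in totals.items()}


result = daily_revenue(RAW)
```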

Data Loading:

Once data has been processed, transformed and is ready for downstream consumers, it needs to be loaded into a data sink: a target location that end users and applications can access.

There are various ways to load the data into the target storage (for example, on AWS), depending on the storage setup. Common load modes include Append, Insert, Update and Overwrite, and the right one depends on how data arrives from the source system. For example, log-based Change Data Capture (CDC) for SQL databases is a pattern for incrementally loading data: only the changes (inserts, updates and deletes) are captured from the database logs and applied to the target.
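The following sketch shows how captured changes might be applied to a target table with an upsert (insert, or update if the key already exists) plus deletes. It assumes the change events have already been read from the source's log and arrive as hypothetical `(op, id, name)` tuples. `sqlite3` stands in for the target, and the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later. Real CDC tools emit richer events, with ordering metadata and before/after values.

```python
import sqlite3


def apply_changes(conn, changes):
    """Apply CDC-style change events to the target table.

    Each event is (op, id, name), where op is 'I' (insert), 'U' (update) or 'D' (delete).
    """
    with conn:  # one transaction per batch of changes: all applied or none
        for op, row_id, name in changes:
            if op in ("I", "U"):
                # Upsert: insert the row, or update it if the primary key already exists.
                conn.execute(
                    "INSERT INTO customers (id, name) VALUES (?, ?) "
                    "ON CONFLICT(id) DO UPDATE SET name = excluded.name",
                    (row_id, name),
                )
            elif op == "D":
                conn.execute("DELETE FROM customers WHERE id = ?", (row_id,))
            else:
                raise ValueError(f"unknown operation: {op}")


target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
target.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])

# Hypothetical change events, as a CDC reader might emit them from the transaction log.
apply_changes(target, [("U", 1, "Ada L."), ("D", 2, None), ("I", 3, "Linus")])
rows = target.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```

Wrapping each batch in a transaction means a failure partway through leaves the target unchanged, so the batch can simply be retried.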

Data Validations:

As data moves through the stages of its life cycle, being extracted, transformed and loaded, it needs to be checked and validated for quality. This is why data engineers do a lot more than just extract, transform and load data: they need to run quality checks and profiling throughout the entire pipeline, which can be time-consuming. It is therefore worth considering an AI-based solution that can help automate this process.
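Profiling can start very simply. This sketch computes per-column null counts, distinct counts and value ranges over a list of dict rows, then turns them into rule-based findings. The column names and rules are hypothetical examples, not any specific tool's output. Dedicated libraries compute far richer profiles.

```python
def profile(rows, columns):
    """Compute simple per-column stats: null count, distinct count, min and max."""
    stats = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        stats[col] = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            "min": min(present) if present else None,
            "max": max(present) if present else None,
        }
    return stats


# Hypothetical sample with a duplicate key, a missing value and a negative amount.
rows = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": -5.0},
]
report = profile(rows, ["order_id", "amount"])

# Turn the profile into findings using hypothetical quality rules.
issues = []
if report["order_id"]["distinct"] < len(rows):
    issues.append("duplicate order_id")
if report["amount"]["nulls"]:
    issues.append("missing amount")
if report["amount"]["min"] is not None and report["amount"]["min"] < 0:
    issues.append("negative amount")
```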

For example, data engineers can design a schema before populating the data warehouse and enforce constraints on key columns, so that incoming values must match what the schema expects. There are a number of open-source libraries for data quality checks, such as Great Expectations and Pandera (Python) and Deequ (Scala, with the PyDeequ Python wrapper). They let you declare constraints as code and share them across pipelines for data verification. Many data platform providers, like Databricks, offer ETL frameworks (such as Delta Live Tables, or DLT) that let you define quality checks alongside your data pipelines.
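As an illustration of enforcing constraints in the schema itself, this sketch declares `NOT NULL`, `CHECK` and primary-key constraints up front and lets the database reject bad rows. The rejected rows are collected for review instead of failing the whole load. SQLite stands in for the warehouse and the `orders` table is hypothetical. Warehouse engines differ in which constraints they actually enforce, and this is not the Great Expectations, Pandera or DLT API.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Constraints declared up front: the database itself rejects rows that violate them.
conn.execute(
    """
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        amount      REAL NOT NULL CHECK (amount >= 0),
        status      TEXT NOT NULL CHECK (status IN ('new', 'paid', 'shipped'))
    )
    """
)


def load_rows(conn, rows):
    """Insert rows one by one; collect those the schema rejects instead of failing the batch."""
    rejected = []
    for row in rows:
        try:
            with conn:  # commit on success, roll back on error
                conn.execute("INSERT INTO orders VALUES (?, ?, ?, ?)", row)
        except sqlite3.IntegrityError as exc:
            rejected.append((row, str(exc)))
    return rejected


rejected = load_rows(conn, [
    (1, 10, 25.0, "paid"),
    (2, 11, -3.0, "new"),     # violates CHECK (amount >= 0)
    (1, 12, 9.0, "shipped"),  # duplicate primary key
    (3, None, 4.0, "new"),    # violates NOT NULL on customer_id
])
loaded = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```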

Workflow Orchestration:

Data pipelines are often not linear: one pipeline can have many data sources and jobs. It’s important to be aware of these dependencies and make sure that the data flows are scheduled and run in the right order.
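One way to reason about these dependencies is as a directed acyclic graph (DAG), which is also how tools like Airflow model pipelines. This sketch uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to work out which tasks can run together in each wave. The task names are hypothetical and no tasks are actually executed.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_sales": {"extract_orders", "extract_customers"},
    "validate_sales": {"transform_sales"},
    "load_warehouse": {"validate_sales"},
    "refresh_dashboard": {"load_warehouse"},
}

sorter = TopologicalSorter(dag)
sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose dependencies have all finished
    waves.append(ready)                 # these could run in parallel
    sorter.done(*ready)                 # pretend each task succeeded
```

Tasks in the same wave have no dependencies on each other, which is what lets an orchestrator run them concurrently.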

Workflow orchestration tools not only execute and manage data pipelines; they also provide functions such as retries, logging, caching, notifications and observability. It is therefore essential for data engineers to know at least one workflow orchestration tool. Apache Airflow and Prefect are open-source frameworks that can be used for these purposes, and Argo (Argo Workflows, which runs on Kubernetes) is a newer entrant with strong potential.
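To show what an orchestrator's retry feature does under the hood, here is a minimal, hypothetical retry decorator with exponential backoff and logging. It is not any tool's API. In Airflow you would set `retries` and `retry_delay` on a task, and in Prefect you would pass `retries` and `retry_delay_seconds` to `@task`. The `flaky_extract` task is invented for the example.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")


def with_retries(max_attempts=3, delay_seconds=1.0, backoff=2.0):
    """Retry a task on any exception, waiting longer after each failure and logging it."""
    def decorator(task):
        @functools.wraps(task)
        def wrapper(*args, **kwargs):
            wait = delay_seconds
            for attempt in range(1, max_attempts + 1):
                try:
                    return task(*args, **kwargs)
                except Exception as exc:
                    log.warning("%s failed on attempt %d/%d: %s",
                                task.__name__, attempt, max_attempts, exc)
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the error to the caller
                    time.sleep(wait)
                    wait *= backoff  # exponential backoff
        return wrapper
    return decorator


calls = {"n": 0}


@with_retries(max_attempts=3, delay_seconds=0.01)
def flaky_extract():
    """Hypothetical task that times out twice and then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source timed out")
    return "42 rows"


result = flaky_extract()
```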

Productionizing a data pipeline:

Once you have developed your data pipeline, it’s time to move it into a production environment. On the technical side, code versioning and application monitoring are crucial. CI/CD also matters, because it ensures that every change, including updates to error handling and data quality controls, is tested and deployed consistently. Business considerations include Service-Level Agreements (SLAs), cost optimization and budget constraints.
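Monitoring against an SLA often starts with a freshness check. This sketch assumes each table records the UTC timestamp of its last successful load and compares the resulting lag to an SLA window. The function name and the two-hour SLA are hypothetical, and a real deployment would send an alert rather than print. Because it takes `now` as a parameter, it is also easy to cover with unit tests in a CI pipeline.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_loaded_at, sla, now=None):
    """Return (within_sla, lag) for a table, given the time of its last successful load."""
    if now is None:
        now = datetime.now(timezone.utc)
    lag = now - last_loaded_at
    return lag <= sla, lag


# Hypothetical values: the table last loaded at 09:30 UTC and must be at most 2 hours old.
now = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)
ok, lag = check_freshness(datetime(2024, 3, 1, 9, 30, tzinfo=timezone.utc), timedelta(hours=2), now=now)
if not ok:
    print(f"SLA breach: data is {lag} old")  # a real job would alert or page instead
```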

Data security and privacy:

Once the pipelines are ready to be deployed, data engineers need to consider whether any data governance or privacy concerns arise. Are there policies or security standards that need to be in place? Data governance and security are usually handled by a separate team, but data engineers can help automate some of that team's work. For example, if personal data must be identified, deleted or anonymized before the data is served to end users, data engineers can build these steps directly into their pipelines so they run automatically.
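Here is a minimal sketch of embedding privacy rules in a pipeline step, assuming hypothetical field names. One field is dropped, an identifier is pseudonymized with a keyed HMAC-SHA256 hash (so records can still be joined without exposing the raw value), and email addresses in free text are masked with a simple regex. Keyed hashing is pseudonymization rather than full anonymization, the regex will not catch every email format, and in practice the key would come from a secrets manager.

```python
import hashlib
import hmac
import re

# Hypothetical key; never hard-code a real key in source code.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash: stable for joins, not reversible without the key."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def protect(record: dict) -> dict:
    """Drop, hash or mask personal fields before the record is served downstream."""
    out = dict(record)
    out.pop("phone", None)                                          # delete: not needed downstream
    out["customer_email"] = pseudonymize(record["customer_email"])  # pseudonymize: keeps joinability
    out["notes"] = EMAIL_RE.sub("[REDACTED]", record["notes"])      # mask emails in free text
    return out


safe = protect({
    "customer_email": "ada@example.com",
    "phone": "+1-555-0100",
    "notes": "Contact ada@example.com about the refund",
    "amount": 42.0,
})
```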

Data Infrastructure:

None of the above is possible without a solid data infrastructure, whose two fundamental aspects are compute and storage. Many organizations now run their IT infrastructure in a cloud environment (AWS, Azure or GCP) to get more options for their data infrastructure. For compute, there are four main types of workloads, each with corresponding engines: ETL orchestration, data warehouse jobs, machine learning and streaming. It is common to use a different engine for each type of workload. For example, Spark is one of the most popular frameworks for processing big data in parallel.
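Spark's core idea is data parallelism: split the data into partitions, process each partition independently, then combine the partial results. This standard-library sketch mimics that map-and-reduce pattern with a word count. It is not Spark code. Threads stand in for cluster executors; in CPython they will not speed up CPU-bound work, so a real job would use processes or an engine like Spark.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(items, n):
    """Split a list into n partitions, assigning items round-robin."""
    return [items[i::n] for i in range(n)]


def count_words(lines):
    """Map step: count words within a single partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(lines, workers=4):
    """Run the map step on each partition concurrently, then reduce by merging the counts."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(count_words, partition(lines, workers)))
    total = Counter()
    for partial in partial_counts:  # reduce step
        total.update(partial)
    return total


lines = ["spark splits data", "data moves to executors", "executors process data"]
counts = parallel_word_count(lines, workers=2)
```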

Storage:

Some popular options for storing data are:

– Cloud object storage, such as AWS S3, Azure Blob Storage and Google Cloud Storage (GCS).
– Common file formats for data in cloud storage, including Parquet, Avro, ORC, JSON and CSV.

Newer table formats, such as Delta Lake, Apache Hudi and Apache Iceberg, are gaining ground because they offer better data management. For example, they address some of the key issues of data lakes, such as the lack of ACID transactions, missing schema enforcement and the resulting risk of data corruption.
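To illustrate how table formats can add ACID-style guarantees on top of plain files, here is a toy transaction log loosely inspired by the approach Delta Lake takes. Data files only become visible once a numbered commit entry referencing them exists, and a commit fails if another writer has already claimed that version. This is a simplified sketch, not the actual Delta, Hudi or Iceberg protocol: it only tracks added file names (no deletes, schemas or real Parquet files). It relies on `os.link` refusing to overwrite an existing file, which assumes a local filesystem that supports hard links.

```python
import json
import os
import tempfile


def commit(table_dir: str, version: int, added_files: list[str]) -> None:
    """Publish table version `version` by atomically creating its log entry.

    The entry is written to a temp file first, then hard-linked into place.
    os.link raises FileExistsError if that version was already committed,
    so two concurrent writers cannot both claim the same version.
    """
    log_dir = os.path.join(table_dir, "_log")
    os.makedirs(log_dir, exist_ok=True)
    fd, tmp_path = tempfile.mkstemp(dir=log_dir, prefix=".tmp-")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump({"add": added_files}, f)
        os.link(tmp_path, os.path.join(log_dir, f"{version:020d}.json"))
    finally:
        os.remove(tmp_path)  # the committed entry survives as its own hard link


def committed_files(table_dir: str) -> list[str]:
    """Return the data files referenced by committed log entries, in version order."""
    log_dir = os.path.join(table_dir, "_log")
    files = []
    for name in sorted(os.listdir(log_dir)):
        if name.endswith(".json") and not name.startswith("."):  # ignore in-flight temp files
            with open(os.path.join(log_dir, name)) as f:
                files.extend(json.load(f)["add"])
    return files


table = tempfile.mkdtemp()
commit(table, 0, ["part-000.parquet"])
commit(table, 1, ["part-001.parquet"])
# A second writer racing for version 1 is rejected instead of silently overwriting it.
try:
    commit(table, 1, ["part-001-conflict.parquet"])
    conflict = False
except FileExistsError:
    conflict = True
visible = committed_files(table)
```

Readers never see a half-written commit, because an entry only appears under its final name once it is complete. The losing writer can re-read the log and retry with the next version number.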

This is a high-level introduction to the main components that data engineers come across when building data pipelines. If you are looking to introduce data engineering in your organization, feel free to connect with us.

With Chapter247’s support, you can put these key concepts and design considerations into practice and, more importantly, implement them using different frameworks and languages. Connect Now!
